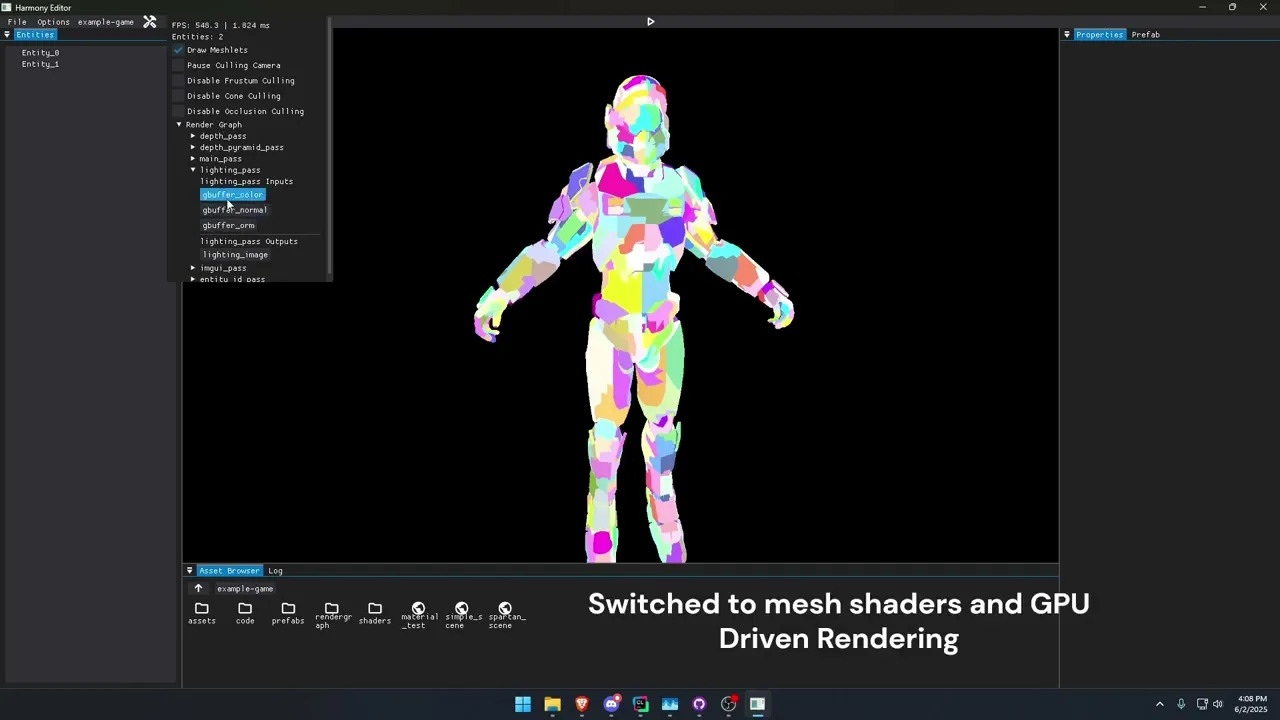

Making an easily extendable GPU Driven Renderer in Vulkan

Looping over thousands of entities and generating draw calls every frame turns out to be pretty expensive. What if the GPU did most of the work instead?

Draw call generation has traditionally been a major bottleneck for game renderers. It runs on the CPU, where ideally you want as much of the frame-time budget as possible for gameplay systems and things like physics. The obvious solution is to multithread draw command generation, but this is still inefficient and creates a slew of other problems.

What are draw calls?

Draw calls are how you submit rendering work to the GPU through a graphics API. The easy way to set up a game renderer is to have it loop over every entity in the scene, grab the mesh and material, and issue a draw call for each one every frame. This scales poorly with large scenes, because the CPU cost grows with the number of entities.

Thankfully, modern graphics APIs introduced MultiDrawIndirect commands. These let us fill one large buffer with draw commands and then tell the GPU to execute all of them with a single call. Still, this provides no performance benefit if we keep looping over every entity in the scene every frame just to rebuild that buffer.
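To make the idea of a "buffer of draw commands" concrete, here is a minimal sketch of what one entry looks like. The struct mirrors the field layout of Vulkan's `VkDrawIndirectCommand`; it is redeclared here only so the snippet compiles without the Vulkan headers. `VertexRange` and `makeCommand` are hypothetical names for illustration, and using `firstInstance` to carry a per-object index is an assumption, not something this renderer is confirmed to do.

```cpp
#include <cassert>
#include <cstdint>

// Same fields, in the same order, as VkDrawIndirectCommand in the Vulkan spec.
// Redeclared so this sketch builds without <vulkan/vulkan.h>.
struct DrawIndirectCommand {
    std::uint32_t vertexCount;
    std::uint32_t instanceCount;
    std::uint32_t firstVertex;
    std::uint32_t firstInstance;
};
static_assert(sizeof(DrawIndirectCommand) == 16, "four tightly packed 32-bit fields");

// Hypothetical: where one mesh lives inside a shared vertex buffer.
struct VertexRange {
    std::uint32_t firstVertex;
    std::uint32_t vertexCount;
};

// Hypothetical helper: builds the command for one mesh.
// Assumption: firstInstance is reused as an index into per-object data.
inline DrawIndirectCommand makeCommand(const VertexRange& mesh, std::uint32_t objectIndex) {
    assert(mesh.vertexCount > 0 && "an empty draw would only waste a slot in the buffer");
    return DrawIndirectCommand{mesh.vertexCount, 1u, mesh.firstVertex, objectIndex};
}
```

An array of these goes into a buffer created with `VK_BUFFER_USAGE_INDIRECT_BUFFER_BIT` and is consumed by `vkCmdDrawIndirect(commandBuffer, buffer, offset, drawCount, stride)` with `stride` set to `sizeof(DrawIndirectCommand)`. A `drawCount` above 1 needs the `multiDrawIndirect` device feature, and a non-zero `firstInstance` needs `drawIndirectFirstInstance`.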

I decided to implement a system similar to what Remedy employed in their engine for Alan Wake 2.

Instead of looping over every entity in the scene every frame, we generate the draw calls for a mesh once, when it is added to the scene. These are stored in multiple arrays, called 'buckets'. We use different buckets to separate meshes by shader and draw order. For instance, we have an opaque mesh bucket, a transparent mesh bucket, and an alpha tested mesh bucket.

Every frame, we just upload all the data from these arrays to the GPU and call vkCmdDrawIndirect. This keeps the per-frame CPU time nearly constant regardless of scene size. In my renderer, the CPU time is less than 1ms even in a scene with thousands of meshes, and it runs on just a single thread.
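The bucket idea can be sketched roughly as follows. This is not the actual code from my renderer or from Remedy's engine, just a minimal illustration: `DrawBuckets`, `Bucket`, and `DrawCommand` are hypothetical names, and removal of meshes is left out.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Same field layout as VkDrawIndirectCommand; redeclared to avoid the Vulkan headers.
struct DrawCommand {
    std::uint32_t vertexCount, instanceCount, firstVertex, firstInstance;
};

// One bucket per shader / draw-order group, as described above.
enum class Bucket : std::size_t { Opaque, AlphaTested, Transparent, Count };

class DrawBuckets {
public:
    // Called once when a mesh enters the scene, not every frame.
    // Returns the slot of the command inside its bucket.
    std::size_t add(Bucket bucket, const DrawCommand& cmd) {
        assert(bucket != Bucket::Count);
        auto& cmds = buckets_[static_cast<std::size_t>(bucket)];
        cmds.push_back(cmd);
        return cmds.size() - 1;
    }

    // Per frame: the contiguous array that gets copied to the GPU as-is.
    const std::vector<DrawCommand>& commands(Bucket bucket) const {
        assert(bucket != Bucket::Count);
        return buckets_[static_cast<std::size_t>(bucket)];
    }

    // Number of bytes to copy into the indirect buffer for this bucket.
    std::size_t uploadSizeBytes(Bucket bucket) const {
        return commands(bucket).size() * sizeof(DrawCommand);
    }

private:
    std::array<std::vector<DrawCommand>, static_cast<std::size_t>(Bucket::Count)> buckets_;
};
```

The per-frame work is then one copy of `commands(bucket).data()` per bucket plus one indirect draw per bucket, with no per-entity loop on the CPU.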

This brings up the question of how to do culling. If we want the CPU to do as little work as possible, then the GPU needs to be sent the entire array of meshes in our scene, even if most of them aren't visible. This leads us to compute and mesh shaders.

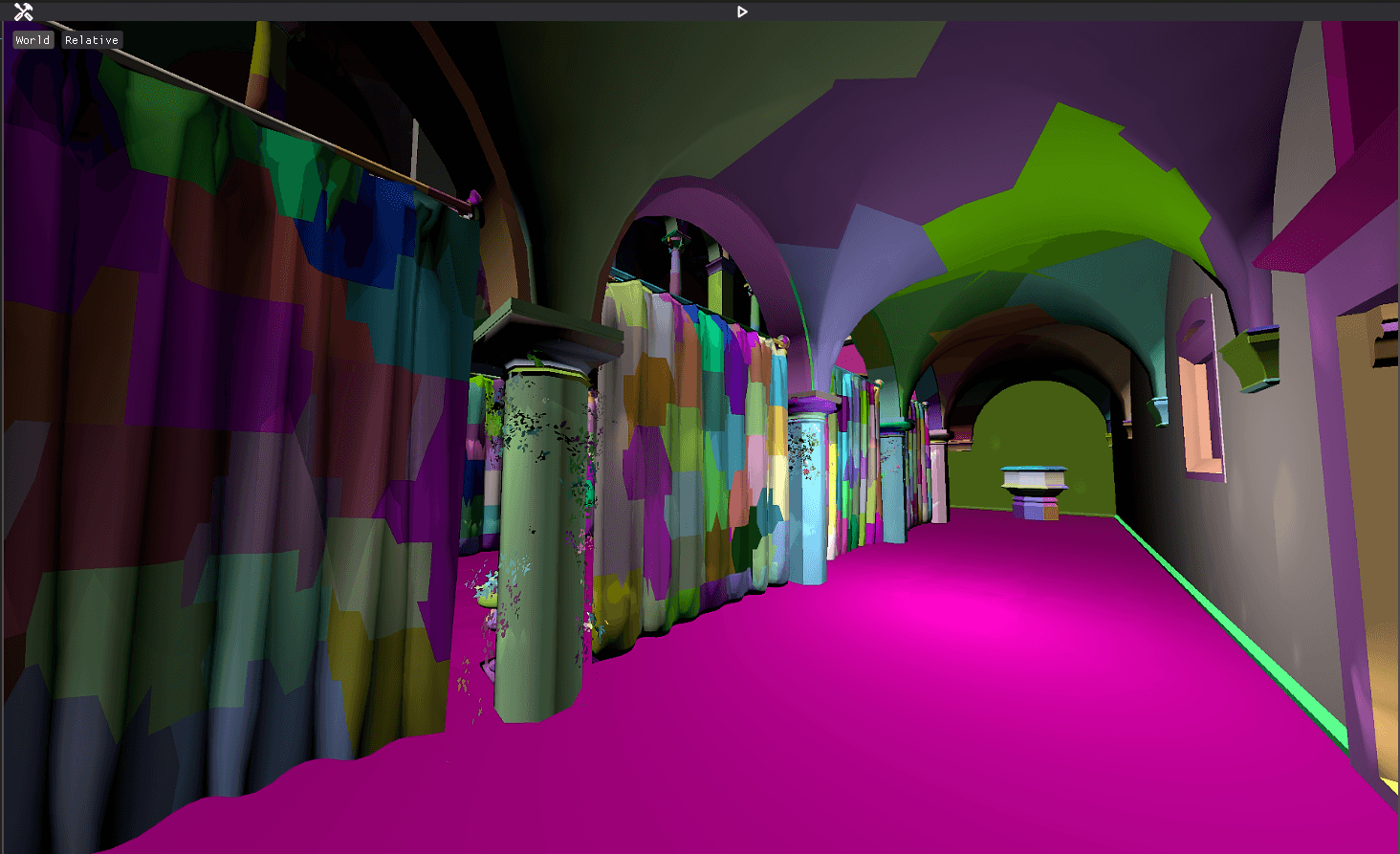

There are multiple ways to do GPU-side culling in a compute shader. For instance, each draw command can contain a bit marking whether it is culled, which the compute shader sets based on coarse frustum and occlusion culling. Alternatively, the compute shader can populate its own draw command arrays. Personally, I am not using a coarse compute culling pass in my renderer yet; I am just using mesh shaders to cull at the meshlet level.
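Both options can be sketched on the CPU. The C++ below is only a reference for the logic a compute shader would run with one thread per draw; it is not shader code and not what my renderer does. The bounding spheres, the plane convention, and all names are assumptions. For the "cull bit" variant it zeroes `instanceCount`, which is one simple way to turn a command into a no-op, and it covers frustum culling only.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Plane  { float nx, ny, nz, d; };     // inside when n.p + d >= 0 (assumed convention)
struct Sphere { float x, y, z, radius; };   // assumed per-draw bounding volume
struct DrawCommand { std::uint32_t vertexCount, instanceCount, firstVertex, firstInstance; };

// A sphere is rejected only if it lies fully behind at least one frustum plane.
inline bool sphereVisible(const Sphere& s, const std::array<Plane, 6>& frustum) {
    for (const Plane& p : frustum) {
        const float dist = p.nx * s.x + p.ny * s.y + p.nz * s.z + p.d;
        if (dist < -s.radius) return false;
    }
    return true;
}

// Variant 1: flag in place. Culled draws stay in the buffer with instanceCount = 0.
inline std::size_t cullInPlace(std::vector<DrawCommand>& draws,
                               const std::vector<Sphere>& bounds,
                               const std::array<Plane, 6>& frustum) {
    assert(draws.size() == bounds.size());
    std::size_t visible = 0;
    for (std::size_t i = 0; i < draws.size(); ++i) {
        const bool v = sphereVisible(bounds[i], frustum);
        draws[i].instanceCount = v ? 1u : 0u;
        if (v) ++visible;
    }
    return visible;
}

// Variant 2: compaction. Only visible draws are written to a new array.
inline std::vector<DrawCommand> cullCompact(const std::vector<DrawCommand>& draws,
                                            const std::vector<Sphere>& bounds,
                                            const std::array<Plane, 6>& frustum) {
    assert(draws.size() == bounds.size());
    std::vector<DrawCommand> out;
    for (std::size_t i = 0; i < draws.size(); ++i) {
        if (sphereVisible(bounds[i], frustum)) out.push_back(draws[i]);
    }
    return out;
}
```

On the GPU, the compacted variant would typically append with an atomic counter and be drawn with `vkCmdDrawIndirectCount`, which reads the draw count from a buffer. The in-place variant keeps the buffer size fixed but still makes the GPU step over the empty commands.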

Mesh Shaders

Mesh shaders are a relatively new feature of modern GPU hardware. They replace the geometry stages of the standard rendering pipeline (Vertex, Tessellation, and Geometry shaders) with just Task shaders and Mesh shaders, while the Fragment shader stays as the final stage.

Task and Mesh shaders work very similarly to compute shaders, but they have the ability to output completely custom geometry for the fragment shader to shade. This means they can do everything Vertex, Geometry, and Tessellation shaders could do, all from one shader.

This also means we can very easily just do frustum and occlusion culling from inside the mesh shader.

Mesh shaders are used with meshlets. The idea is that you split a mesh into many little pieces when it is loaded into the engine, and each mesh shader workgroup is responsible for handling one meshlet. This allows very granular culling and GPU-side Level of Detail computations, which can greatly improve performance in scenes with billions of triangles.
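As a rough illustration of the splitting step, here is a naive meshlet builder that walks the index buffer in order and starts a new meshlet whenever a vertex or triangle limit would be exceeded. This is a sketch, not what my engine does; real tools optimize for locality and tighter bounds. The names and the default limits of 64 vertices and 124 triangles are assumptions, not values taken from this renderer.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>
#include <vector>

struct Meshlet {
    std::vector<std::uint32_t> vertices;   // unique indices into the mesh vertex buffer
    std::vector<std::uint8_t>  triangles;  // 3 local indices per triangle, into `vertices`
};

// Greedy split of a triangle list into meshlets (hypothetical helper).
inline std::vector<Meshlet> buildMeshlets(const std::vector<std::uint32_t>& indices,
                                          std::size_t maxVertices = 64,
                                          std::size_t maxTriangles = 124) {
    assert(indices.size() % 3 == 0 && "expects a triangle list");
    // Local indices are stored as 8-bit values, so at most 256 vertices per meshlet.
    assert(maxVertices >= 3 && maxVertices <= 256 && maxTriangles >= 1);

    std::vector<Meshlet> meshlets;
    Meshlet current;
    for (std::size_t t = 0; t < indices.size(); t += 3) {
        // Conservative count of vertices this triangle would add to the meshlet.
        std::size_t newVerts = 0;
        for (std::size_t k = 0; k < 3; ++k) {
            const auto& v = current.vertices;
            if (std::find(v.begin(), v.end(), indices[t + k]) == v.end()) ++newVerts;
        }
        // Close the current meshlet if this triangle would not fit.
        if (current.vertices.size() + newVerts > maxVertices ||
            current.triangles.size() / 3 >= maxTriangles) {
            meshlets.push_back(std::move(current));
            current = Meshlet{};
        }
        // Append the triangle, remapping global indices to meshlet-local ones.
        for (std::size_t k = 0; k < 3; ++k) {
            auto& v = current.vertices;
            const auto it = std::find(v.begin(), v.end(), indices[t + k]);
            const std::size_t local = static_cast<std::size_t>(it - v.begin());
            if (it == v.end()) v.push_back(indices[t + k]);
            current.triangles.push_back(static_cast<std::uint8_t>(local));
        }
    }
    if (!current.triangles.empty()) meshlets.push_back(std::move(current));
    return meshlets;
}
```

Each resulting meshlet would then get its own bounding volume at load time, which is what the task or mesh shader tests against the frustum before emitting any triangles.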

That covers the GPU driven rendering side. Managing all of this is still quite verbose, especially in Vulkan, so I decided to implement a custom render graph to drive my renderer.

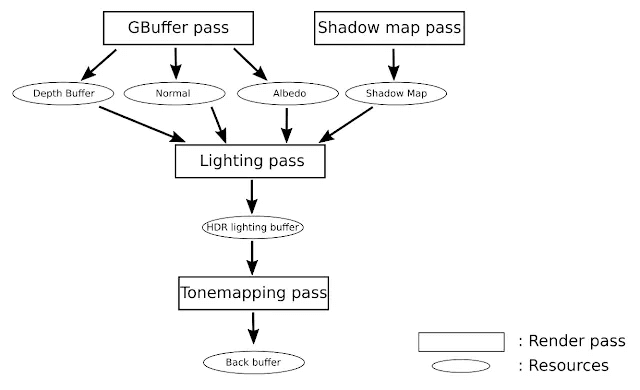

A render graph is a directed acyclic graph that describes the passes needed to render a scene and the order they have to run in.

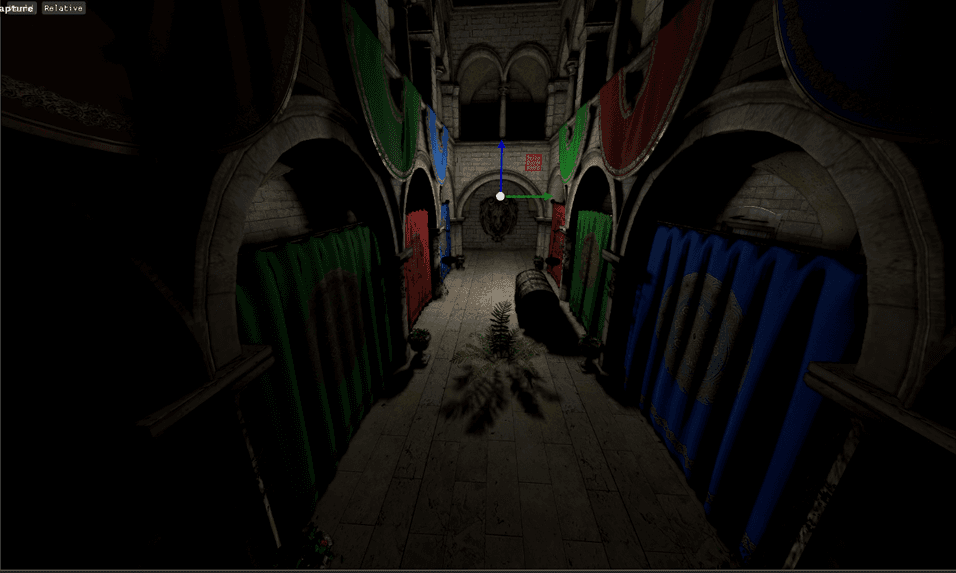

My render graph is still quite primitive. It reads a bunch of JSON files from a subdirectory in the game folder and organizes them into a graph based on their inputs and outputs.

It then does a depth-first search over the graph, which gives us a valid execution order for the passes. This allows the render graph to set the dynamic states needed for rendering each pass without having to hardcode a bunch of different functions for each render pass. We still need custom code sometimes, so the render graph is set up to use std::function, and we can set custom PreRender, Render, and PostRender functions for each pass when necessary.
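A minimal sketch of that ordering step, assuming each pass simply lists the resource names it reads and writes. The passes are built in code here rather than loaded from JSON, and every name (`RenderPass`, `RenderGraph`, `compile`, `execute`) is hypothetical rather than taken from my renderer.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>
#include <unordered_map>
#include <utility>
#include <vector>

struct RenderPass {
    std::string name;
    std::vector<std::string> inputs;    // resources this pass reads
    std::vector<std::string> outputs;   // resources this pass writes
    std::function<void()> preRender, render, postRender;  // optional custom hooks
};

class RenderGraph {
    enum class State { Unvisited, InProgress, Done };

public:
    void addPass(RenderPass pass) { passes_.push_back(std::move(pass)); }

    // Depth-first search from every pass; a pass is emitted only after all
    // passes producing its inputs, so producers always run before consumers.
    std::vector<std::size_t> compile() const {
        std::unordered_map<std::string, std::size_t> producer;
        for (std::size_t i = 0; i < passes_.size(); ++i)
            for (const std::string& out : passes_[i].outputs) producer[out] = i;

        std::vector<std::size_t> order;
        std::vector<State> state(passes_.size(), State::Unvisited);
        for (std::size_t i = 0; i < passes_.size(); ++i) visit(i, producer, state, order);
        return order;
    }

    // Runs the hooks of every pass in compiled order; unset hooks are skipped.
    void execute() const {
        for (std::size_t i : compile()) {
            const RenderPass& p = passes_[i];
            if (p.preRender) p.preRender();
            if (p.render) p.render();
            if (p.postRender) p.postRender();
        }
    }

private:
    void visit(std::size_t i,
               const std::unordered_map<std::string, std::size_t>& producer,
               std::vector<State>& state,
               std::vector<std::size_t>& order) const {
        if (state[i] == State::Done) return;
        assert(state[i] != State::InProgress && "cycle in render graph");
        state[i] = State::InProgress;
        for (const std::string& in : passes_[i].inputs) {
            const auto it = producer.find(in);
            // Inputs without a producer are treated as external resources.
            if (it != producer.end() && it->second != i) visit(it->second, producer, state, order);
        }
        state[i] = State::Done;
        order.push_back(i);
    }

    std::vector<RenderPass> passes_;
};
```

Because the order is derived from inputs and outputs, passes can be registered in any order, and adding a new pass does not require touching the code that sequences the others.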

This also allows us to easily keep track of which resources each pass uses, since the render graph handles resource creation.

My render graph still doesn't handle barriers or resource aliasing, and I will definitely need to add both eventually. It is good enough for now, so I can work on making pretty pixels show up on the screen.